The article ChatGPT Health: Misdiagnoses in a premium guise first appeared in the online magazine BASIC thinking. With our newsletter UPDATE you can start the day well informed every morning.

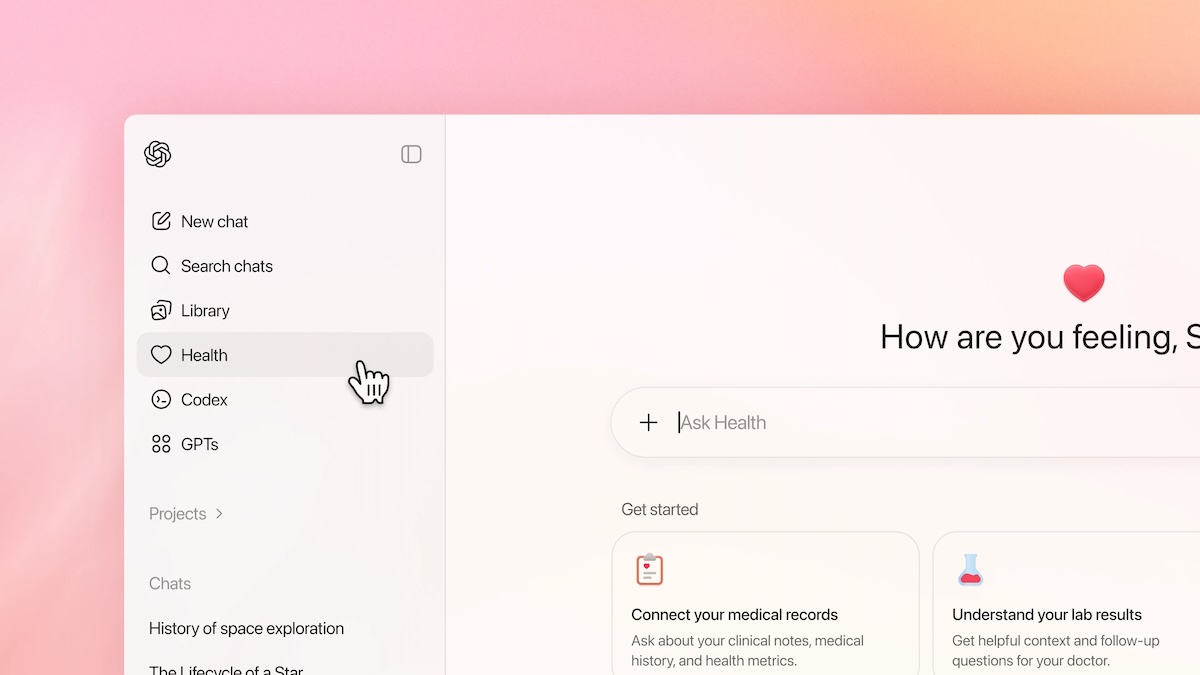

OpenAI is adding a new Health tab to ChatGPT that lets users upload electronic medical records and connect health and fitness apps. However, reactions to ChatGPT Health have been mixed. A commentary.

What is ChatGPT Health?

- ChatGPT Health is a separate, isolated area within the chatbot. It is meant to let users have conversations about health topics without their sensitive data getting mixed up with regular chats. According to OpenAI, ChatGPT Health can evaluate health data and go beyond general advice, for example by indicating when medical attention is required.

- According to OpenAI, the system was developed together with more than 260 doctors, who analyzed answers to health questions to help ensure that ChatGPT gives medically plausible and correct answers. The function can be used with fitness and health data from apps such as Apple Health, Weight Watchers or Peloton. Integration of electronic medical records is initially available only in the USA.

- According to OpenAI, millions of users consult ChatGPT about health issues every week. The problem: because of so-called hallucinations, AI chatbots keep producing answers that range from nonsensical to completely wrong. When it comes to health issues, this can have devastating consequences, as Google recently demonstrated once again.

OpenAI shifts responsibility to users

OpenAI emphasizes that, despite its close collaboration with doctors, ChatGPT Health may not make diagnoses or recommend treatments. Officially, the tool is only intended to provide information and help users prepare for doctor's visits.

This is particularly relevant from a legal standpoint, as OpenAI has had ChatGPT Health classified as a consumer product in the USA. This means the function does not fall under the strict data protection laws that apply to the healthcare sector. This releases OpenAI from certain liability risks and shifts responsibility primarily to the users.

This would certainly not be possible within the EU, and that's a good thing. Despite improvements and medical review, misinformation in the form of AI hallucinations is by no means ruled out. There are also data protection concerns.

OpenAI does offer purpose-built encryption, but not end-to-end encryption of the kind common with messenger services. In practice, this means the data is protected against unauthorized access, but OpenAI itself can still view it.

Voices

- Fidji Simo, CEO of Applications at OpenAI, in a blog post: “ChatGPT Health is another step in making ChatGPT a personal super assistant, providing you with information and tools to help you achieve your goals in all areas of your life. We are still at the very beginning of this journey, but I look forward to making these tools available to more people.”

- OpenAI boss Sam Altman told CNBC back in the summer: “Healthcare is the area where we’ve seen the biggest improvements. It’s a big part of ChatGPT usage. I think it’s really important to give people better information about their healthcare and empower them to make better decisions.”

- Author and journalist Aidan Moher mocks in a post on Bluesky: “What could go wrong when an LLM trained to confirm, support and promote user biases encounters a hypochondriac with a headache?”

A health advisor that ChatGPT can never be

Since millions of users continue to consult ChatGPT on health issues despite unanimous warnings, it is, first of all, welcome that OpenAI has set out to improve the chatbot in this area.

But the company is also presenting ChatGPT as a health advisor that it can never be. Independent education of users will therefore be just as crucial as the question of whether the chatbot actually issues enough warnings in the right places and how it handles highly sensitive health topics.

The fact that processing medical patient data is not possible in the EU points to regulatory hurdles. In Germany, health data is fortunately subject to strict processing requirements. Presumably, OpenAI will use such data in the USA to serve personalized advertising. Complete data protection is not guaranteed.

As a tech industry expert, I have some concerns about ChatGPT Health and its potential for misdiagnoses in a premium guise. While the idea of using AI to provide health information and advice is innovative, it carries significant risks when it comes to accuracy and reliability.

AI models like ChatGPT are not medical professionals and lack the nuanced understanding and experience that human doctors have. This can lead to misinterpretation of symptoms, incorrect diagnoses, and potentially harmful advice being given to users.

Furthermore, the premium guise of ChatGPT Health may give users a false sense of security, leading them to rely on the AI model for medical advice instead of consulting a qualified healthcare professional.

It is crucial that companies developing AI-powered healthcare platforms like ChatGPT Health prioritize accuracy, transparency, and user safety above all else. Ensuring that the AI model is regularly updated with the latest medical information, providing clear disclaimers about its limitations, and encouraging users to consult with a doctor for a proper diagnosis are just a few ways to mitigate the risks of misdiagnoses in a premium guise.