The article “Break reminder against ChatGPT addiction?” first appeared in the online magazine Basic Thinking. With our Update newsletter, you can start every morning well informed.

OpenAI is introducing a new feature in ChatGPT: if you chat for too long, you will now get a reminder to take a break. The company is responding to concerns about the effects of intensive AI use, especially with regard to mental health and addictive behavior.

Background: Break reminder against ChatGPT addiction

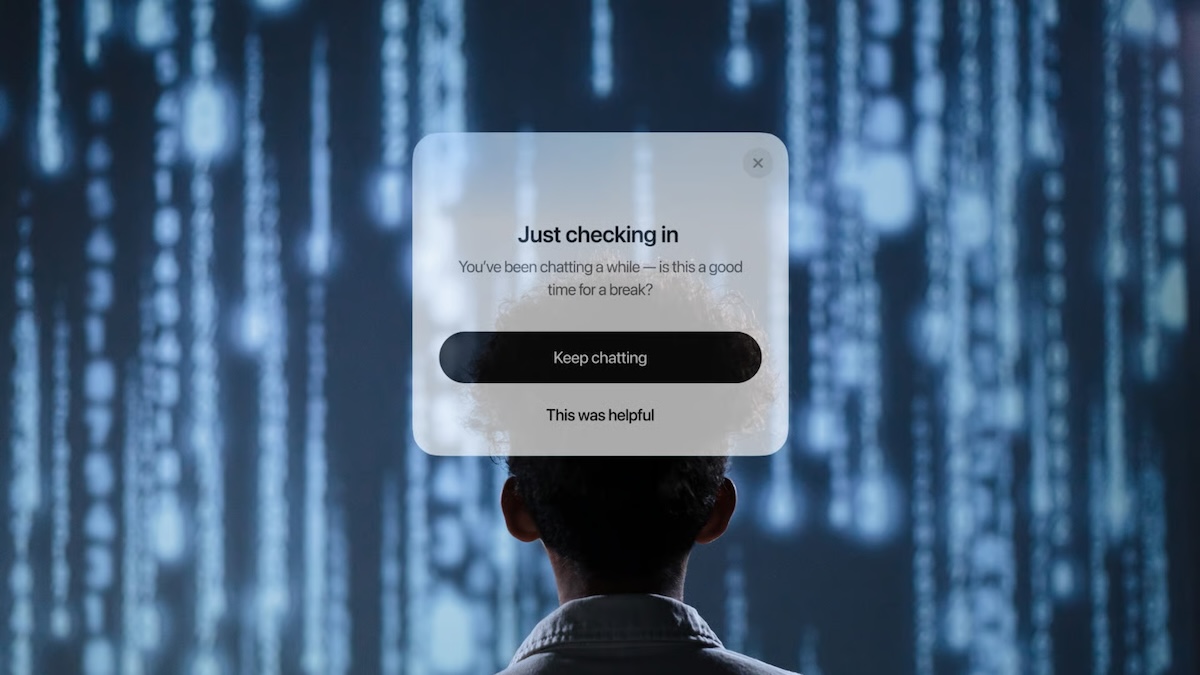

- During long sessions, the new feature shows a pop-up with the message: “Just checking in. You’ve been chatting a while. Is this a good time for a break?” Users can then either keep chatting or end the session.

- The break reminder is part of a larger OpenAI initiative intended to correct past mistakes. The goal: ChatGPT should be useful and supportive without fostering dependency. OpenAI emphasizes that it does not want to keep users in the chat, because less time spent in ChatGPT can be a sign that the product is working.

- The changes are also a response to criticism: by OpenAI’s own account, ChatGPT had been too agreeable since the beginning of the year and sometimes even reinforced problematic thoughts, because answers sounded good but were not actually helpful. There were also cases in which users sought emotional or mental support from the AI without the system being suited to the task.

Our assessment

The break feature interrupts the flow when a conversation with the AI becomes too intense or too long. OpenAI says it does not measure its own success by the longest possible usage time, which is a rarity in the tech industry, where products are usually optimized to keep users in their apps for as long as possible.

The move also comes against the backdrop of reports about users who rely on ChatGPT as a substitute for human contact or as a constant problem solver. People with mental health problems in particular risk getting lost in endless conversations with the AI. OpenAI says the AI will respond more sensitively to signs of emotional distress and will point people to professional help instead. New behavior for questions about important personal decisions is also set to be introduced soon.

The problem of hallucinations also remains. The longer the sessions, the greater the risk that users take questionable information at face value. The break reminder is therefore also a prompt to critically question your own usage behavior and the quality of the answers yourself, with the emphasis on “yourself”.

Voices

- OpenAI writes: “There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency. While rare, we’re continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed.”

- Ian Carlos Campbell of Engadget: “The system is reminiscent of the reminders some Nintendo games show when you play for a long stretch, but the ChatGPT feature unfortunately has a darker background. The ‘yes, and’ disposition of OpenAI’s AI and its ability to generate factually false or dangerous answers have led users down dark paths.”

- A Reddit user described their own chat habit: “Sometimes I’m out and about, watching something or having a thought, and then I think: ‘I’ll talk about that with Chat later.’ (…) It doesn’t hurt anyone, but I ask myself: Is that becoming a dependency?”

Outlook: ChatGPT addiction

With the break reminder, OpenAI is entering new territory: while other platforms are built for maximum usage time, here usage is actively interrupted. This could become a model for other AI providers, especially if further reports of problematic usage patterns emerge.

It remains to be seen whether these reminders actually change usage behavior. Anyone who uses ChatGPT as a constant companion may not be slowed down by a pop-up. The pop-up could even have the opposite effect if users count on being warned at some point and therefore keep relying on the tool.

In the long term, it will become clear how real the addiction potential of AI tools is and whether technical measures such as pop-ups are sufficient. One thing is certain: for all the advantages of AI, we should not unlearn our own critical thinking.

As a tech industry expert, I believe the concept of a break reminder as a defense against chat hunches is an intriguing idea. Chat hunches refer to the potential bias or misinformation that can arise from relying solely on memory during online conversations or interactions.

Incorporating a break reminder system, where users can pause the conversation and verify information before responding, could help mitigate the risk of spreading false information or making decisions based on incomplete or inaccurate data. This could be particularly useful in situations where quick responses are not necessary, such as in professional settings or when discussing complex topics.

However, it is important to consider the potential downsides of such a system. It could slow down the pace of conversations and disrupt the natural flow of communication. Additionally, users may become overly reliant on the break reminder system, which could erode their critical thinking skills or their ability to think on their feet.

Overall, I think a break reminder system has potential benefits in helping users make more informed decisions and reducing the risk of spreading misinformation. However, it should be implemented thoughtfully and with consideration for the potential drawbacks.